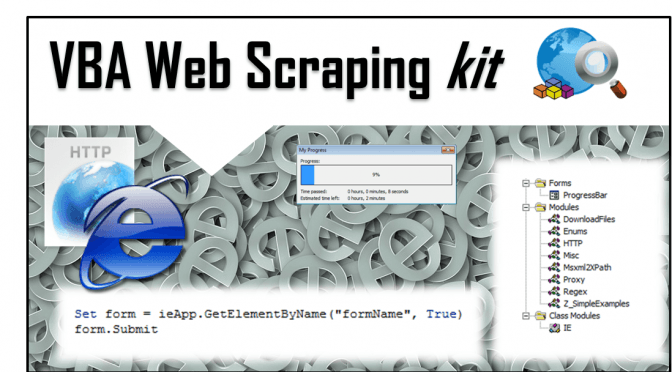

I am proud to present the next Kit coming from AnalystCave.com! The Web Scraping Kit is a simple kit for VBA Web Scrapers that contains a set of ready-made examples for different scraping scenarios. The kit is equipped with several tools that let you leverage HTTP GET and POST, IE, proxies, XPath, Regex and more Web Scraping tools. Get […]

Tag: Html

Web Scraping Tutorial

For years I have been turning to Web Scraping to download and scrape web content, but only recently have I wanted to dive deep into the subject and learn all the techniques out there. Ranging from the simple Excel “From Web” feature to simulating browser interaction – there […]

Like VBA? You will love HTA! (HTA example using VBS)

Comfortable with VBA? Do your clients or users need VBA solutions, but want them as a lightweight GUI rather than cumbersome Excel Workbooks with Macro restrictions? Well, why not try an HTML Application (HTA)? What’s an HTA? It’s a simple HTML application with embedded VBScript or JavaScript, or even both if needed! Don’t see the opportunities yet? Imagine a […]

Multithreaded browser automation (VBA Web Scraping)

Web Scraping is very useful for getting the information you need directly off websites. Sometimes, however, simple browser automation is not enough in terms of performance. Having created both the IE and Parallel classes, I decided to jump at the opportunity to create a simple example of how multithreaded browser automation can be achieved. Daniel Ferry […]

Simple class for browser automation in VBA

Web browser automation (using Microsoft’s Web Browser) is not an easy task in VBA when doing Web Scraping. Excel is certainly a great tool for building your web automation scripts, but at every corner you find some obstacles. For me the most challenging was always the nondeterministic state of the IE browser control whenever […]