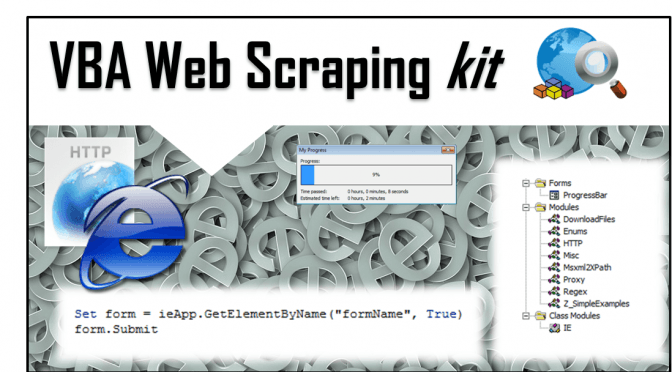

I am proud to present the next Kit from AnalystCave.com! The Web Scraping Kit is a simple kit for VBA Web Scrapers that contains a set of ready-made examples for different scraping scenarios. The kit includes several tools that let you leverage HTTP GET & POST, IE, proxies, XPath, Regex and more. Get […]

Tag: Scraping

Web Scraping Proxy HTTP request using VBA

Visual Basic for Applications (VBA) is a great way to take your first steps in Web Scraping, as Excel is ubiquitous and makes a good training arena. Web Scraping comes down to making HTTP requests to websites. At some point, however, you will find that some websites will cut you off or prevent multiple […]

Multithreaded browser automation (VBA Web Scraping)

Web Scraping is very useful for getting the information you need directly off websites. Sometimes, however, simple browser automation is not enough in terms of performance. Having created both the IE and Parallel classes, I decided to jump at the opportunity to create a simple example of how multithreaded browser automation can be achieved. Daniel Ferry […]

Simple class for browser automation in VBA

Web browser automation (using Microsoft’s Web Browser) is not an easy task in VBA when doing Web Scraping. Excel is certainly a great tool for building web automation scripts, but you run into obstacles at every corner. For me, the biggest challenge has always been the nondeterministic state of the IE browser control whenever […]

Excel Scrape HTML Tool added to the Scrape HTML Add-In

As I mentioned, I am planning to extend the Scrape HTML Add-In with additional tools and functionality. Scraping HTML content should not require any VBA coding; this rule guides the further development of this tool. See the latest version of the Excel Scrape HTML Add-In here: Excel Scrape HTML Add-In. Adding to the […]